Full-Body Interaction Lab

Context:

Project developed at the Full-Body Interaction Lab of Universitat Pompeu Fabra, where I conducted my Master’s thesis as part of the Master in Intelligent Interactive Systems.

Technologies:

Mixed Reality · Full-Body Interaction · Computer Vision · Deep Learning · Python · C# · Unity

Description:

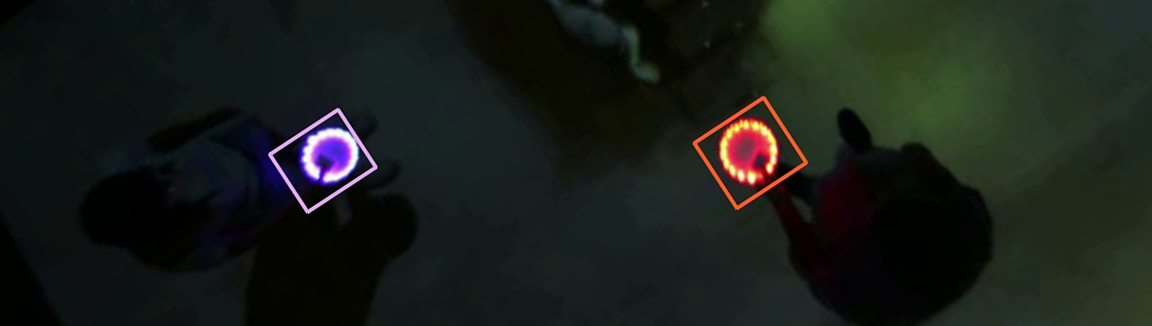

The Full-Body Interaction Lab has a mixed-reality interactive installation composed of two projectors that display virtual environments and four cameras that track users' movements within the space. One of the main applications of this system is a series of Unity-based games designed to study social interaction between children with and without autism spectrum disorder. For years, the tracking system relied on traditional computer vision methods, but newer games exposed performance problems, so it needed to be improved.

My Master's thesis focused on redesigning the tracking system using Deep Learning methods. This task presented several challenges, including the need for real-time performance, accurate user distinction, and seamless integration with Unity.

The proposed pipeline consisted of:

- Image preprocessing

- Real-time object detection using the YOLO (You Only Look Once) neural network

- A tracking module based on a Linear Kalman Filter
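
To illustrate the last stage, here is a minimal Python sketch of a linear Kalman filter that smooths a user's floor position from per-frame detections. It is not the thesis implementation: the constant-velocity motion model, the independent treatment of the x and y axes, the noise values, and the names `AxisKalman` and `Track2D` are all assumptions made for this example.

```python
class AxisKalman:
    """Linear Kalman filter for one axis, state = (position, velocity).

    Assumes a constant-velocity motion model; q and r are illustrative
    noise values, not tuned parameters from the lab's system.
    """

    def __init__(self, q=1e-3, r=1.0, initial_variance=1e3):
        self.pos = 0.0
        self.vel = 0.0
        # 2x2 state covariance, stored element by element.
        self.p00 = initial_variance
        self.p01 = 0.0
        self.p10 = 0.0
        self.p11 = initial_variance
        self.q = q  # process noise added to each diagonal term
        self.r = r  # measurement noise variance

    def predict(self, dt):
        # State transition F = [[1, dt], [0, 1]].
        self.pos += self.vel * dt
        a = self.p00 + dt * self.p10
        b = self.p01 + dt * self.p11
        # Covariance update: P = F P F^T + Q.
        self.p00 = a + dt * b + self.q
        self.p01 = b
        self.p10 = self.p10 + dt * self.p11
        self.p11 = self.p11 + self.q

    def update(self, z):
        # Only the position is measured: H = [1, 0].
        innovation = z - self.pos
        s = self.p00 + self.r
        k0 = self.p00 / s
        k1 = self.p10 / s
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        # Covariance update: P = (I - K H) P.
        p00, p01 = self.p00, self.p01
        self.p00 = (1.0 - k0) * p00
        self.p01 = (1.0 - k0) * p01
        self.p10 -= k1 * p00
        self.p11 -= k1 * p01


class Track2D:
    """Tracks one user's (x, y) position with two independent axis filters."""

    def __init__(self):
        self.x = AxisKalman()
        self.y = AxisKalman()

    def step(self, dt, detection):
        """Advance one frame; `detection` is an (x, y) tuple or None."""
        self.x.predict(dt)
        self.y.predict(dt)
        if detection is not None:
            self.x.update(detection[0])
            self.y.update(detection[1])
        # With no detection the track coasts on the predicted position.
        return self.x.pos, self.y.pos
```

In a pipeline like the one above, `step` would be called once per frame with the centre of the matching detection, or with `None` when the detector misses the user, so the track keeps moving on its predicted velocity until detections resume.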

Lab's website: upf.edu/web/fubintlab